I RARELY LOOK at email newsletters, even the ones I've subscribed to, but in September I opened 'In The Loop' from a Berlin technology collective called Tactical Tech, and inside was a dream opportunity to build on work begun during my sabbatical.

BE AN INGENIUS FOR THE GLASS ROOM LONDON

The Ingenius is the glue that holds The Glass Room together. We're recruiting individuals who we can train up with tech, privacy and data skills in order to support The Glass Room exhibition (coming to London in October 2017). As an Ingenius you'd receive four days of training before carrying out a series of shifts in The Glass Room where you'd be on hand to answer questions, give advice, run workshops, and get people excited about digital security.

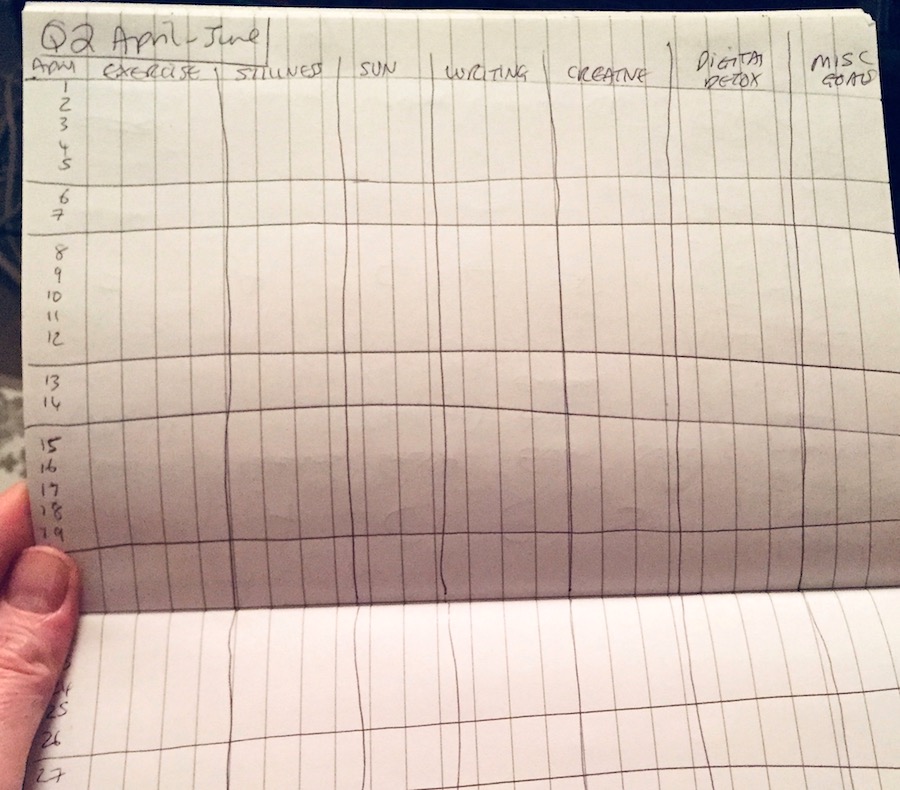

Having spent the first eight months of 2017 studying cybersecurity and cleaning up my own online practices, I had started offering free help sessions in our local café. Engagement was poor – it turns out that free infosec sessions aren't in demand because busy people tend to put these things on the backburner and just hope they don't get hacked in the meantime.

Francis Clarke, who co-runs the Birmingham Open Rights Group which campaigns around citizens' digital rights, warned me that topics like infosec and data privacy were a hard sell. Friends and family confirmed it with 'I don't care if I get sent a few contextual ads' or 'I have nothing to hide'.

So how do you get people to become aware and start to care about their online practices?

Answer: The Glass Room.

***

The Glass Room – presented by Mozilla and curated by Tactical Tech – in every way resembles a bright, shiny tech store inviting passers-by in to check out its wares. Yet another shop on a busy London street. But the items on show are not gadgets but exhibits that help people look into their online lives and think more critically about their interactions with everyday digital services.

To be honest, I mostly saw The Glass Room as providing a readymade audience who were up for talking about this stuff because talking would enable me to get everything I'd been learning out of my head and also level up on my own understanding of the issues.

I didn't think I would stand a chance of being selected but I applied anyway. I've listed some of the questions from the application and my (short version) answers for a bit more context on why I started on this journey – otherwise feel free to skip ahead.

Why are you interested in becoming an Ingenius? (provide 3 reasons)

Individually – I was blown away by Edward Snowden’s revelations and the Citizenfour documentary. I have been data detoxing and self-training in infosec, and I'm very interested in the engagement tools and workshop resources.

Locally – I'm involved in several campaigns. I want to help individuals and campaigners know how to keep their data and communications private and secure.

Nationally/internationally – I'm concerned with the normalisation of surveillance (both governmental and commercial) and how the line is constantly being redrawn in their favour. I would like to understand more about the politics of data and how to think about it more equitably in terms of the trade-offs concerned with policing, sensitive data sharing, commercial data capture and the individual right to privacy.

What do you think about the current state of privacy online?

I have concerns both about privacy clampdowns by governments and mass surveillance by commerce. I love the internet but find the fact that I have to jump through so many hoops to avoid being tracked or identified worrying. I feel I am part of some subversive resistance just to have control of my own data and this is intensifying as I have a writing project that I want to keep anonymous (almost impossible, I have since discovered). I'm also concerned that enacting the paths to anonymity may flag me on a list and that this may be used against me at some future point, especially if there is no context in the data.

I think our right to privacy is disappearing and the biggest issue is getting people to care enough to even talk about that. We seem to be giving up our privacy willingly because of a lack of digital literacy about how our information is being used, the dominance of data brokers such as Google and Facebook (for whom we are the product), the lack of transparency about how algorithms are processing our data, and so on. The issue feels buried and those who control information too powerful to stop.

How would you take the experience and learning as an Ingenius forward?

I’ll be taking it into my local community through advice surgeries in cafés and libraries. There seems to be little privacy/security support for individuals, activists, campaigners and small businesses. I also hope it will give me the wider knowledge to become more involved with Birmingham Open Rights Group, which operates at a more political level.

Finally, I aim to connect more widely online around these topics and investigate options for setting up something to help people in Birmingham if I can find suitable collaborators.

***

I'M IN!

This is one of those things that will completely take me out of my comfort zone but will also likely be one of the best things ever.

***

THE GLASS ROOM when it ran in New York City saw 10,000 come through the doors. In London, on the busy Charing Cross Road, just up from Leicester Square, the figure was close to 20,000.

I was fretting about all sorts of things before my first shift, mostly about standing on my feet and talking to people all day – normally I sit at a desk and say nothing for eight hours that isn't typed. I was also nervous that despite the excellent four days of Glass Room training, I wouldn't know enough to answer all the random questions of 'the general public', who might be anything from shy to panicked to supertechy.

But it was fine. More than fine, it was exhilarating, like the opening night of a show you've been rehearsing for weeks. If anything, I had to dial it back so that visitors would have a chance to figure things out for themselves. The team were lovely and the other Ingeniuses supportive and funny. Most importantly, the visiting public loved it, with 100-strong queues to get in during the final weekend of the exhibition.

It must be a complete rarity for people to want to come in, peruse and engage with items about wireless signals, data capture and metadata. But by materialising the invisible, people were able to socialise around the physical objects and ask questions about the issues that might affect them, or about the way big data and AI are affecting human society.

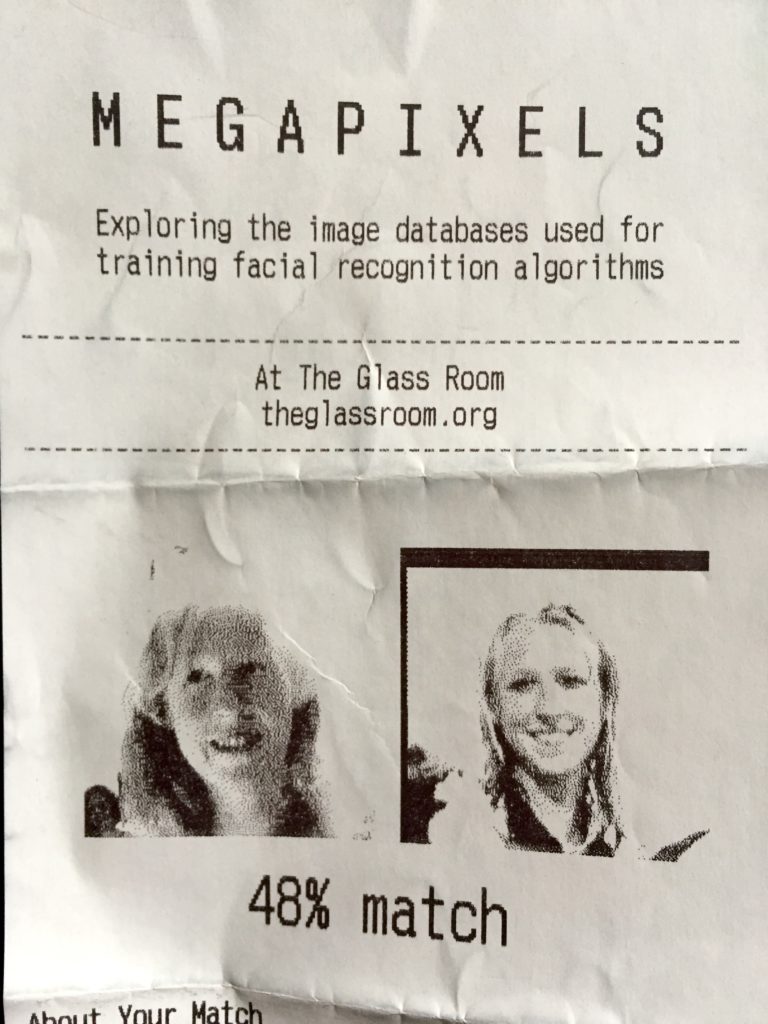

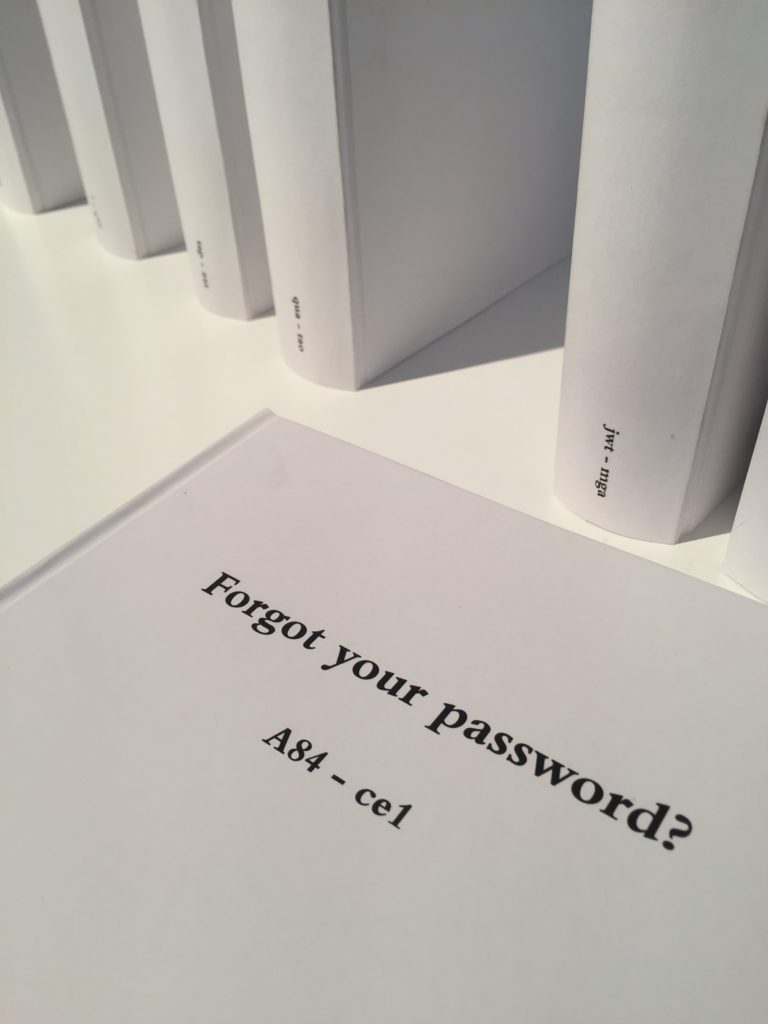

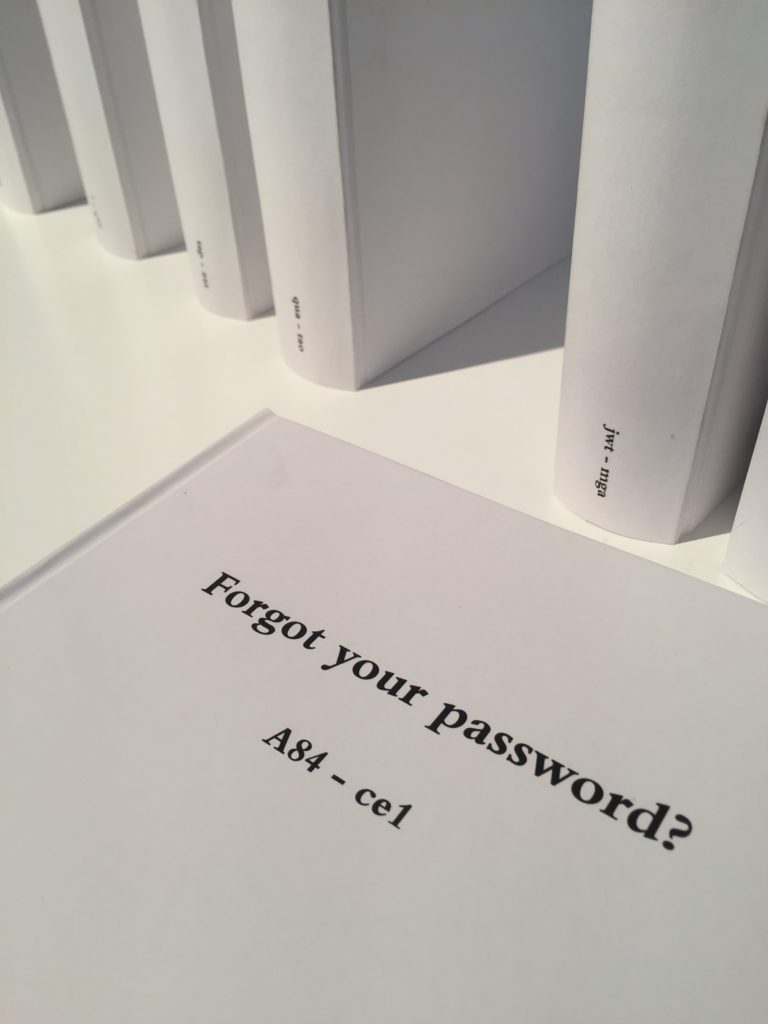

Day after day, people wandered in off the street and began playing with the interactive items in particular: facial recognition to find their online lookalikes, nine volumes of leaked passwords to find their password, newsfeed scanning to find the value of their data, the stinky Smell Dating exhibit to find out who they were attracted to from the raw exposed data of three-day-old T-shirts (c'mon people – add some metaphorical deodorant to your online interactions!).

They also spent time tuning into the trailers for highly surveillant products and brands, and watching an actor reading Amazon Kindle's terms and conditions (just under nine hours, even in the bath).

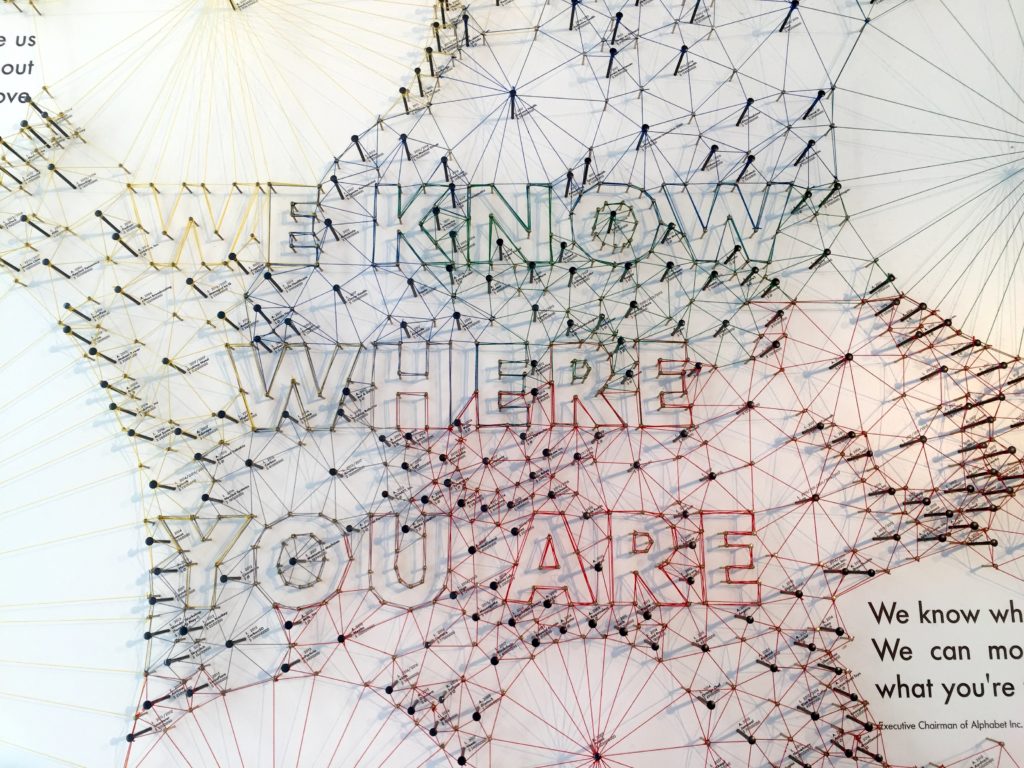

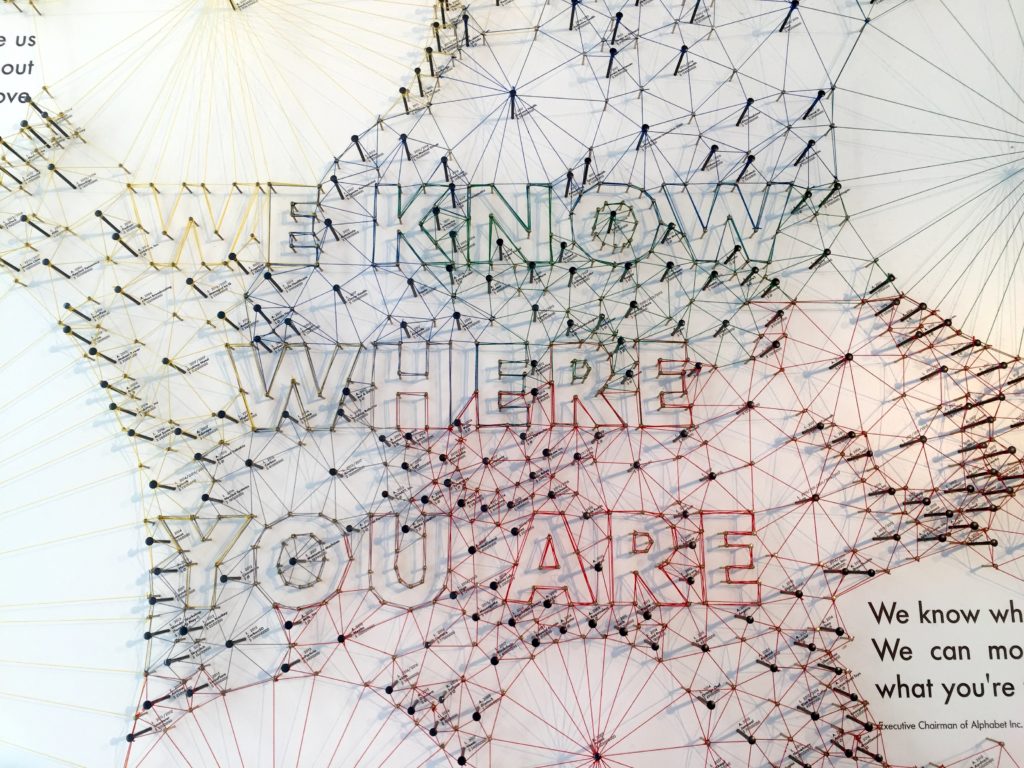

And they gathered en masse around the table-sized visualisations of Google's vast Alphabet Empire that goes way beyond a search engine, Amazon's future Hive factory run mostly by drones and other robots, Microsoft's side investment into remote-controlled fertility chips, Apple's 3D pie charts of turnover and tax avoided, and Facebook founder Mark Zuckerberg's House where you can buy total privacy for just $30 million.

***

THERE WERE THREE themed areas to explore inside The Glass Room, with three further spaces to go deeper and find out more:

- Something to hide – understanding the value of your data and also what you are not hiding.

- We know you – showing what the big five of GAFAM (Google, Amazon, Facebook, Apple and Microsoft) are doing with the billions they make from your online interactions with them.

- Big mother – when technology decides to solve society's problems (helping refugees, spotting illegal immigrants, health sensors for the elderly, DNA analysis to discover your roots), the effect can be chilling.

- Open the box – a browsing space on the mezzanine floor full of animations to explain what goes on behind the screen interface.

- Data Detox Bar – the empowerment station where people could get an eight-day Data Detox Kit (now online here) and ask Ingeniuses questions about the exhibition and issues raised.

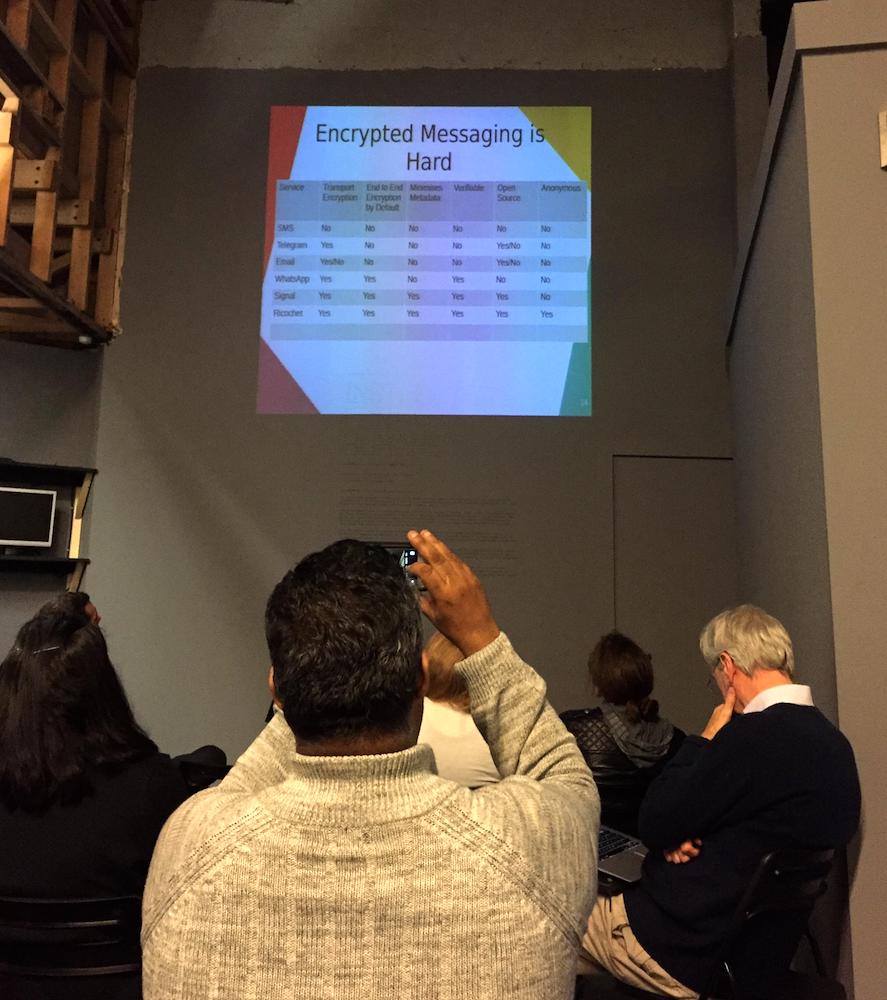

- Basement area – an event space hosting a daily schedule of expert talks, films and hour-long workshops put on by the Ingeniuses.

During the curator's tour by Tactical Tech co-founder Marek Tuszynski, what impressed me most was the framing for The Glass Room. This is not a top-down dictation of what to think but a laying out of the cards for you to decide where you draw the line in the battle between convenience and privacy, risk and reward.

I handed out kit after kit to people who were unaware of the data traces they were creating simply by going about their normal connected life, or unaware that there are alternatives where the default isn't set to total data capture for future brokerage.

Some people needed talking down after seeing the exhibition, some asked how to protect their kids, others were already paranoid and trying to go off the grid or added their own stories of life in a quantified society.

***

THERE ARE THREE LESSONS I've taken away from my experience in The Glass Room to apply to any future sessions I might hold on these topics:

1. Materialise the invisible – bring physical objects (art, prototypes, kits, display devices) so that people can interact and discuss, not just read, listen or be told.

2. Find the 'why' – most people are unaware of, or unconcerned about, the level of data and metadata they produce until they see how it is aggregated and used to profile, score and predict them. Finding out what people care about is where the conversation really starts.

3. More empowerment and empathy, less evangelism – don't overload people with too many options or strategies for resistance, or polarise them with your own activist viewpoint. Meet them where they are at. Think small changes over time.

***

IT'S BEEN A MONTH SINCE The Glass Room and I'm proud of stepping up as an Ingenius and of overcoming my own fears and 'imposter syndrome'.

As well as doing nine shifts at The Glass Room, I also ran two workshops on Investigating Metadata, despite being nervous as hell about public speaking. There are eight workshop modules in Tactical Tech's resources so it would be interesting to work these up into a local training offering if any Brummies are interested in collaborating on this.

I wrote a blog post for NESTA about The Glass Room – you can read it here: Bringing the data privacy debate to the high street.

I did the Data Detox Surgery at an exhibition called Instructions for Humans at Birmingham Open Media, and also set up a mini version of The Glass Room with some pop-up resources from Tactical Tech – there's a write-up about that here. The Ingenius training gave me the confidence and knowledge to lead this.

Leo from Birmingham ORG has also had Glass Room training so we will be looking for opportunities to set up the full pop-up version of The Glass Room in Birmingham in 2018. Get in touch if you're interested – it needs to be a place with good footfall, somewhere like the Bullring or the Library of Birmingham perhaps, but we're open to ideas.

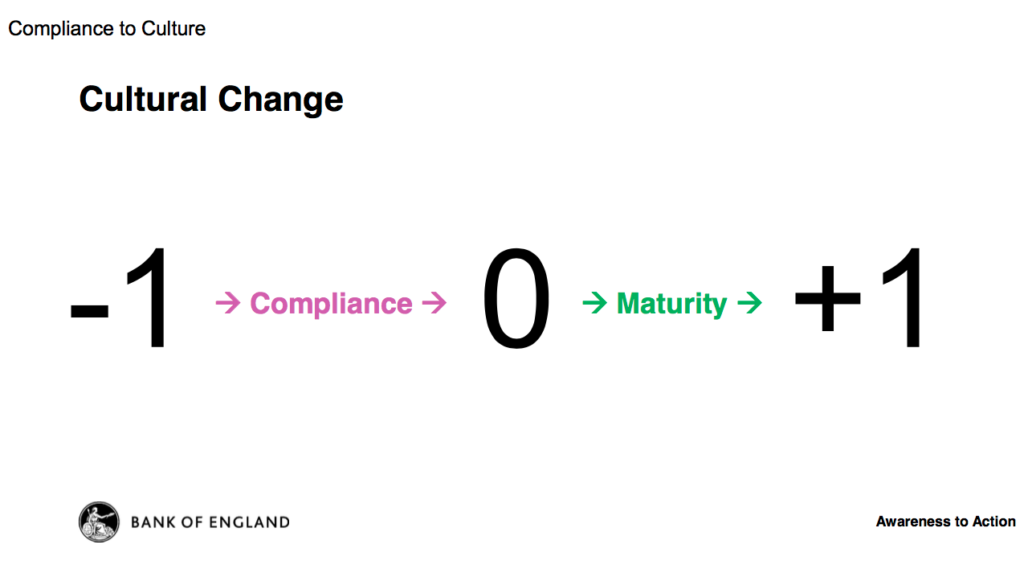

There's also a more commercial idea, which arose at the Data Detox Surgery, to develop this as an employee engagement mechanism within companies to help make their staff more cyber-secure. If employees learn more about their own data privacy and can workshop some of the issues around data collection, then they are more likely to care about company processes around data security and privacy. In short, if they understand the personal risks, they will be more security-conscious when working with customer or commercial data.

Update: In March 2018 I launched a data privacy email for my home city – you can read all about it here.

As ever, watch this space, or get in touch if you think any of this should be taken to a coffee shop for further discussion and development. You can also connect with me on Twitter if you want to follow this journey more remotely.

Thanks for staying to the end.

Hire/commission me: fiona [at] fionacullinan.com

Organised by:

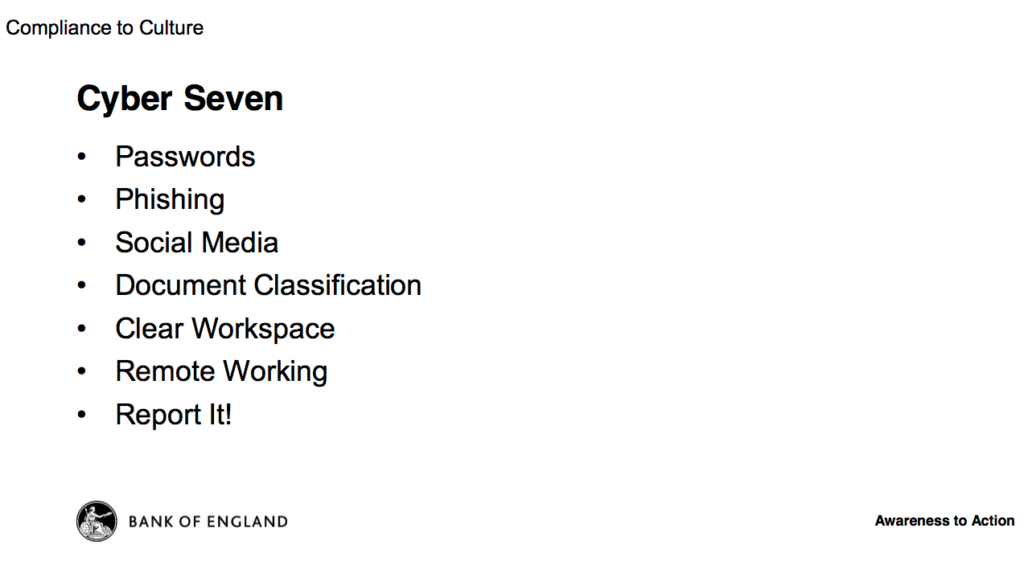

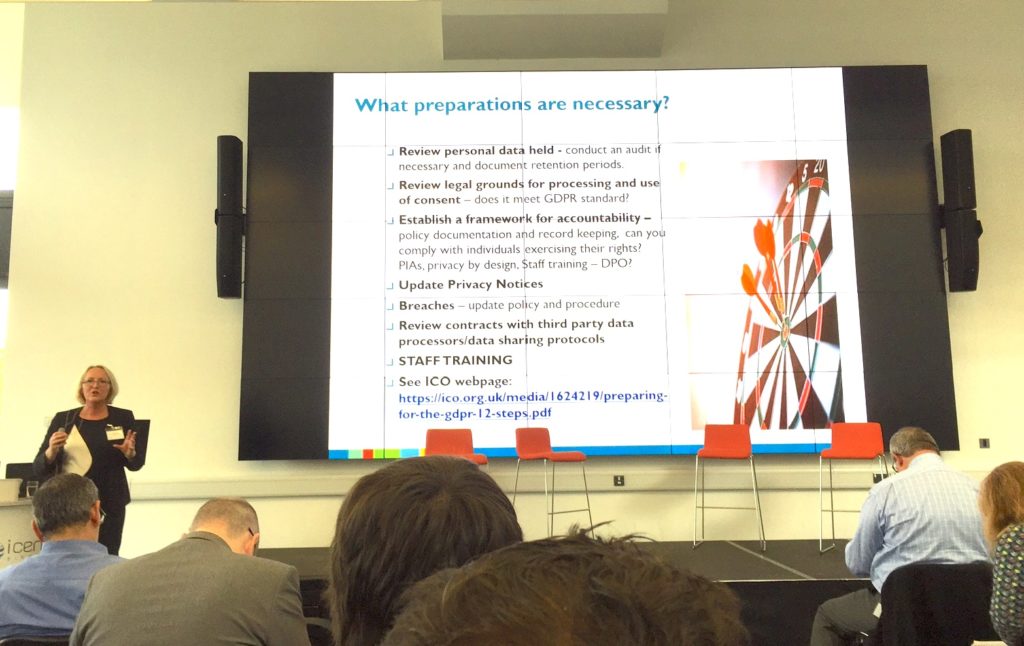

A one-day event held yesterday at Innovation Birmingham on the Aston Uni campus to help businesses get to grips with cybersecurity. It was organised by Metsi Technologies, and supported by the National Police Chiefs' Council and Regional Organised Crime Unit (ROCU) in the West Midlands. The Twitter account and hashtag was @cybersec_uk but the backchannel was pretty quiet. Here are my notes.

* and boob
